Subba Reddy Oota

Neuro-computational models of language comprehension: characterizing similarities and differences between language processing in brains and language models

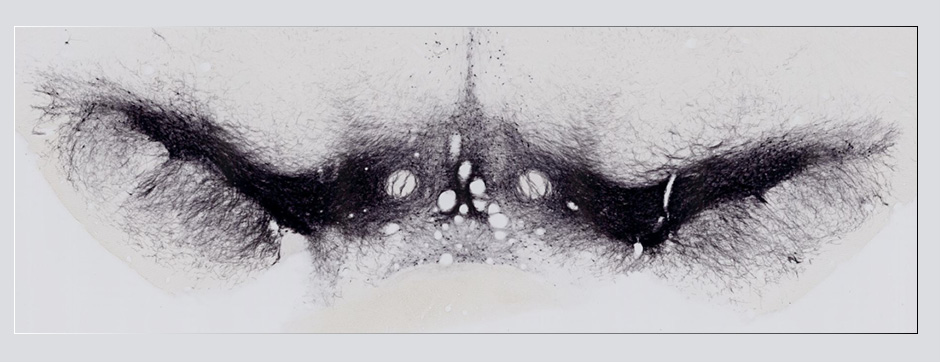

April 2024

Thesis supervisor(s): Xavier Hinaut

Thesis abstract

This thesis explores the synergy between artificial intelligence (AI) and cognitive neuroscience to advance language processing capabilities. It builds on the insight that breakthroughs in AI, such as convolutional neural networks and mechanisms like experience replay, often draw inspiration from neuroscientific findings. This interconnection is especially beneficial for language, where a deeper understanding of uniquely human cognitive abilities, such as processing complex linguistic structures, can pave the way for more sophisticated language processing systems. The emergence of rich naturalistic neuroimaging datasets (e.g., fMRI, MEG) alongside advanced language models opens new pathways for aligning computational language models with human brain activity. The challenge, however, lies in discerning which model features best mirror the language comprehension processes in the brain, which underscores the importance of integrating biologically inspired mechanisms into computational models.

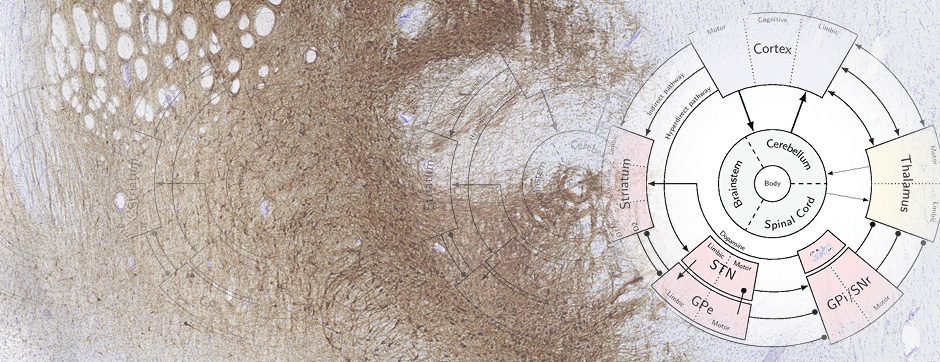

In response to this challenge, the thesis introduces a data-driven framework bridging the gap between neurolinguistic processing observed in the human brain and the computational mechanisms of natural language processing (NLP) systems. By establishing a direct link between advanced imaging techniques and NLP processes, it conceptualizes brain information processing as a dynamic interplay of three critical components, “what,” “where,” and “when,” offering insights into how the brain interprets language during engagement with naturalistic narratives. The study provides evidence that enhancing the alignment between brain activity and NLP systems offers mutual benefits to the fields of neurolinguistics and NLP. The research shows how these computational models can emulate the brain’s natural language processing capabilities by harnessing recent neural network technologies across various modalities: language, vision, and speech. Specifically, the thesis highlights how modern pretrained language models achieve closer brain alignment during narrative comprehension. It investigates the differential processing of language across brain regions, the timing of responses (HRF delays), and the balance between syntactic and semantic information processing. It also explores how different linguistic features align with MEG brain responses over time and finds that the alignment depends on the amount of past context, indicating that the brain encodes words slightly behind the current one, awaiting more future context. In addition, it highlights the biological plausibility of learning reservoir states in echo state networks, which offer interpretability, generalizability, and computational efficiency in sequence-based models. Ultimately, this research contributes valuable insights into neurolinguistics, cognitive neuroscience, and NLP.
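For readers unfamiliar with echo state networks, the following is a minimal generic sketch and not the thesis's implementation. It assumes the common textbook formulation: a fixed random reservoir with a leaky `tanh` update, and a linear readout fitted by ridge regression. All function names, hyperparameter values, and the toy sine-wave task are illustrative assumptions.

```python
import numpy as np


def make_reservoir(n_inputs, n_units, spectral_radius=0.9, seed=0):
    """Draw fixed random input and recurrent weights (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    w_in = rng.uniform(-0.5, 0.5, size=(n_units, n_inputs))
    w = rng.uniform(-0.5, 0.5, size=(n_units, n_units))
    # Rescale so the largest absolute eigenvalue equals spectral_radius.
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
    return w_in, w


def run_reservoir(inputs, w_in, w, leak=0.3):
    """Collect reservoir states with a leaky-integrator tanh update."""
    states = np.zeros((len(inputs), w.shape[0]))
    x = np.zeros(w.shape[0])
    for t, u in enumerate(inputs):
        x = (1 - leak) * x + leak * np.tanh(w_in @ u + w @ x)
        states[t] = x
    return states


def fit_readout(states, targets, ridge=1e-6):
    """Closed-form ridge regression from reservoir states to targets."""
    gram = states.T @ states + ridge * np.eye(states.shape[1])
    return np.linalg.solve(gram, states.T @ targets)


# Toy task (assumption): predict the next sample of a sine wave.
signal = np.sin(np.linspace(0, 20 * np.pi, 1000))[:, None]
w_in, w = make_reservoir(n_inputs=1, n_units=100)
states = run_reservoir(signal[:-1], w_in, w)

washout = 100  # discard the initial transient before fitting
w_out = fit_readout(states[washout:], signal[1:][washout:])
pred = states[washout:] @ w_out
mse = float(np.mean((pred - signal[1:][washout:]) ** 2))
```

Only the readout `w_out` is trained here; the reservoir weights stay fixed, which is what makes this family of models cheap to fit. How the thesis learns or uses reservoir states may differ from this sketch.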